Best Thanksgiving ever for Google? Praise for Gemini 3, commercialization of Ironwood, and possible deal with Meta

Salesforce CEO Marc Benioff left no doubt about his opinion of Gemini 3 in a recent X post: “Holy [expletive]…I’ve used ChatGPT every day for 3 years. Just spent 2 hours on Gemini 3. I’m not going back. The leap is insane — reasoning, speed, images, video… everything is sharper and faster. It feels like the world just changed, again.”

The endorsement was surprising given Benioff’s ties to OpenAI and Anthropic. Then again, OpenAI and Nvidia also chimed in with praise for Google: OpenAI CEO Sam Altman wrote, “looks like a great model,” and Nvidia CEO Jensen Huang said he was “delighted by Google’s success.”

I don’t think everyone’s turning into Mr. Rogers and spreading neighborly love. More likely, each company feels comfortable with its dominance in its own space, whether LLMs or hardware accelerators. It also helps that they are partners: OpenAI uses Google Cloud infrastructure and custom-built TPUs for its AI workloads, and Google uses Nvidia GPUs to optimize software for Gemini.

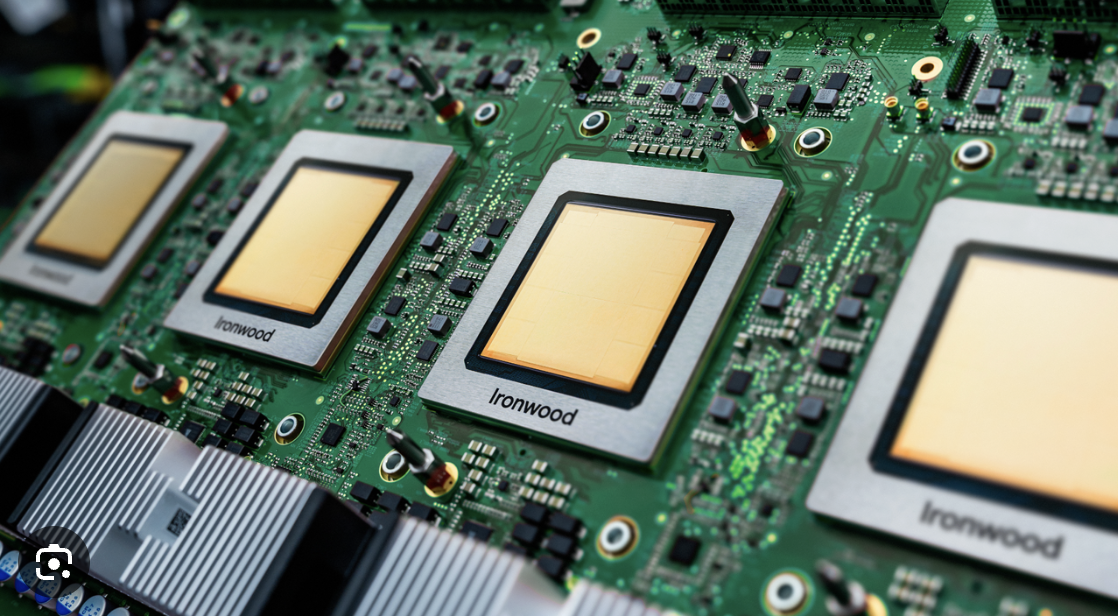

For Google, the shoutouts for Gemini 3 were icing on the cake. The cake was Ironwood, its newly commercialized TPU, which may eventually challenge Nvidia directly. Nvidia’s hold on the GPU market remains strong, but some disruption is a reasonable expectation as the market shifts toward inference.

Nvidia GPUs are currently the standard for both AI training and inference. However, inference workloads carry enormous performance and power demands, which could make TPUs more attractive for power- and latency-sensitive applications. Case in point: Meta is reportedly considering a move to Google’s processors by 2027. Other companies might likewise diversify, not only with Google but also with AMD, AWS, and others developing custom chips that promise higher throughput and efficiency at lower price points than GPUs.

Register today for AI Infrastructure Week, a virtual forum of thought leaders and visionaries from all facets of the AI infrastructure industry, and check out our speaker list.

Susana Schwartz

Technology Editor

RCRTech

AI Infrastructure Top Stories

Converting telco tail spend into strategic advantage: Communications service providers (CSPs) can now combine AI and human insight to turn overlooked tail spend into a driver of strategic transformation, according to Nomia CEO Nick Petheram. He notes that tail spend accounts for roughly 20% of total procurement budgets but about 80% of transactions.

Nvidia Omniverse and CUDA-X power Synopsys simulation solver: At Microsoft Ignite, Synopsys unveiled a simulation-driven solution based on Ansys Fluent, which allows manufacturers to model and optimize dynamic processes in factories.

Omdia talks about a major shift in how networks are operated and automated: Omdia’s Inderpreet Kaur tells RCR Wireless News that predictive and generative AI tools are now central to telco modernization, with AI adoption expanding beyond customer-facing functions and becoming embedded in core network operations.

AI Today: What You Need to Know

Nvidia shares take a hit: BBC News asks the CEO of Future Horizons whether Google’s Gemini 3 challenge will dent Nvidia’s AI chip dominance, especially since Meta, currently one of Nvidia’s biggest customers, is in talks to rent and buy chips from Google.

Former Google, Meta execs focus on energy-saving DCs: Startup Majestic Labs, founded by former Google and Meta executives Ofer Shacham and Masumi Reynders, has raised $100 million from Bow Wave Capital in hopes of solving the problems of memory-intensive AI workloads with “energy-saving” data centers.

Micron to invest in Hiroshima chip plant: Micron will invest $9.6 billion to build a new plant in Hiroshima, Japan, that will produce advanced high-bandwidth memory (HBM) chips. Construction at an existing site is slated to begin in May 2026. Japan’s Ministry of Economy, Trade and Industry will provide up to 500 billion yen for the project.

Amazon earmarks $50 billion for US government AI: AWS is planning the first commercial cloud built for US government AI and supercomputing. Starting in 2026, it will invest up to $50 billion in AI and high-performance computing (HPC) infrastructure for US government agencies.

Analysts note Microsoft execs’ departures mark a setback at a critical time: Microsoft has lost two senior AI infrastructure leaders: Nidhi Chappell, its head of AI infrastructure, and Sean James, a senior director of energy and data center research, who is heading to Nvidia.

Industry Resources

- Capex in AI infrastructure spills over to test-and-measurement market as data-center performance, efficiency grow in importance

- AI gold rush meets cold reality

- AI chips hit inflection point

- DOE, FERC and PJM are attempting to balance accelerated grid development with protecting consumers from skyrocketing prices