
It’s the latest in a series of major OpenAI deals

In sum – what we know:

- Deal overview – OpenAI will purchase up to 750 megawatts of computing power from Cerebras through 2028 in a deal valued at over $10 billion.

- Technology focus – The partnership uses Cerebras’ “wafer-scale” chips, which the company says can deliver inference speeds up to 15 times faster than traditional GPU systems.

- Hardware diversification – The move expands OpenAI’s “resilient portfolio” of hardware, adding to partnerships with Broadcom and AMD to reduce reliance on Nvidia.

OpenAI has inked yet another deal with a chip provider. The company has partnered with Cerebras in a multi-year agreement valued at more than $10 billion.

The deal is the latest in a series of agreements OpenAI has struck with major hardware providers in an effort to lock up the compute capacity it thinks it will need over the next few years. It remains to be seen whether the capacity it has already secured will ultimately be enough, or whether newer technologies will reduce the need for high-performance hardware.

The deal

Under the agreement, OpenAI gains access to up to 750 megawatts of Cerebras compute capacity over three years, running through 2028. Deployment will begin this year and ramp up in stages toward full capacity over the course of the agreement.

The focus here is latency: specifically, tightening the loop between user requests, model processing, and response generation. OpenAI’s infrastructure team sees Cerebras hardware as particularly well suited to workloads where speed matters most, such as coding assistance, voice interactions, and AI agents. OpenAI has claimed that faster responses help with retention, though whether that speed advantage actually moves the needle on retention and usage patterns at scale remains to be seen.

“When you ask a hard question, generate code, create an image, or run an AI agent, there is a loop happening behind the scenes: you send a request, the model thinks, and it sends something back,” said OpenAI in its blog post announcing the deal. “When AI responds in real time, users do more with it, stay longer, and run higher-value workloads.”

The Cerebras advantage

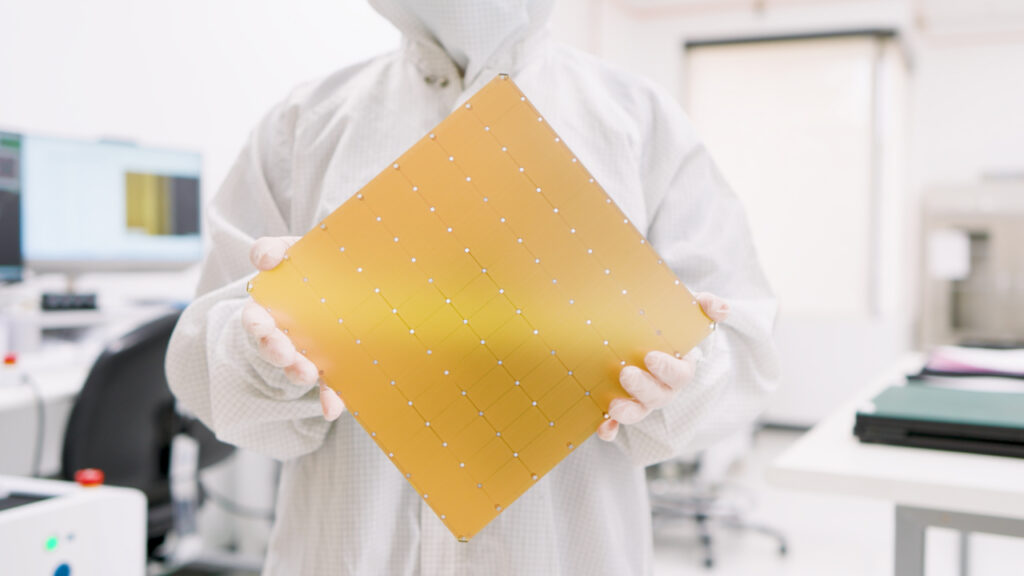

Cerebras has carved out a distinct position in the chip landscape by building processors roughly the size of a dinner plate. These “wafer-scale” chips pack compute, memory, and bandwidth onto a single piece of silicon, aiming to sidestep the data movement bottlenecks that plague traditional architectures — where information has to shuttle between separate processors and memory components.

The performance numbers Cerebras is touting are hard to ignore. The company claims inference speeds up to 15 times faster than GPU-based systems. Running OpenAI’s gpt-oss-120B model, it has demonstrated 3,000 tokens per second, with some tests reaching 18 times the speed of conventional GPU setups. These are, of course, controlled benchmarks; how they translate across the messy diversity of real-world workloads is another question entirely. It also remains to be seen how easily Cerebras can scale manufacturing.

For Cerebras, founded around 2015, this deal could mark a turning point. The company has raised around $2 billion to date and was reportedly in discussions to raise another $1 billion at a $22 billion valuation. It filed for an IPO in 2024 but has delayed going public multiple times, choosing instead to keep raising money privately. The OpenAI partnership might provide exactly the kind of validation and revenue that changes the calculus, or it might simply represent one very large customer without signaling broader market traction.

All the deals

The Cerebras agreement slots into a wider pattern of hardware diversification at OpenAI. The company has been deliberately assembling what it describes as a “resilient portfolio that matches the right systems to the right workloads,” rather than depending entirely on Nvidia GPUs.

That multi-vendor approach now includes Broadcom for custom chip design (though those chips haven’t reached production yet), AMD’s Instinct MI450 GPUs, and Cerebras for low-latency inference. Nvidia still plays the primary role, but OpenAI is clearly working to loosen its grip. Or perhaps OpenAI simply thinks it needs more compute than Nvidia alone can provide.

This isn’t happening in isolation. Across the industry, companies are hunting for alternatives to Nvidia’s dominant position in AI compute. Intel is reportedly deep in talks to acquire SambaNova Systems for around $1.6 billion.

It’s also worth noting that Sam Altman is already a Cerebras investor, and the two companies have maintained ties since 2017, exchanging research and early-stage work. That history likely smoothed the path to this partnership, but it also raises fair questions about whether the deal reflects a purely technical evaluation or benefits from existing relationships. Either way, the agreement highlights a shift toward more heterogeneous AI infrastructure.